Create your own stateful widgets using Shortcuts with Control Centre in macOS 26 Tahoe

One of the side effects of using a MacBook with a screen notch for the FaceTime camera has been less menubar real estate. After years of pruning menubar apps and adventures with Bartender replacements, the new version of macOS offered promise for moving items out of the menubar and into the new configurable Control Centre. So far there doesn’t seem to be much third-party app support for Control Centre, but I have had success in making my own controls with Shortcuts.

Example: A caffeinate control to prevent the system from sleeping

There are numerous utility apps to keep the screen on, with more or fewer features, but there’s also a built-in caffeinate command. I used it with SwiftBar to make my own menubar control. These controls cannot be moved into the new Control Centre (yet), but I figured it ought to be possible to do this without third-party utilities since Shortcuts controls are supported. The only issue was that, while a shortcut that toggles a setting or running process is easy enough to create, it is hard to tell the current state of the setting or process. The key was using the Rename Shortcut action so that the shortcut renames itself each time it runs, and the title shown in the Control Centre widget updates to reflect the current state.

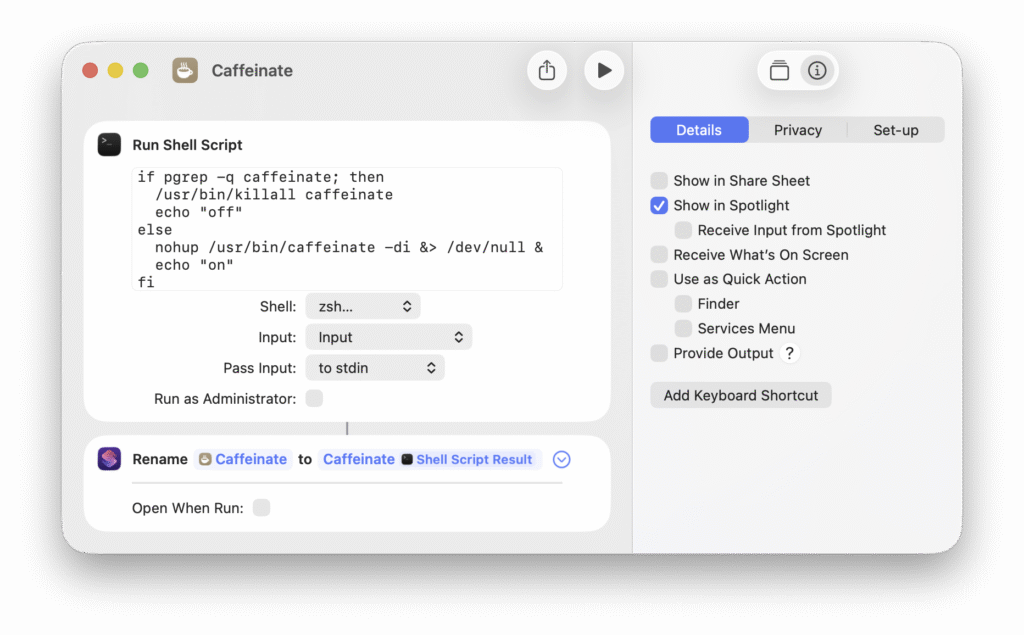

The shortcut has only two actions. The first is a shell script that checks whether caffeinate is running: if it is, the script stops it; otherwise it starts caffeinate in the background to prevent system sleep (-d keeps the display awake, -i prevents idle sleep):

    if pgrep -q caffeinate; then
        /usr/bin/killall caffeinate
        echo "off"
    else
        nohup /usr/bin/caffeinate -di &> /dev/null &
        echo "on"
    fi

In each case, the status is output and passed to the next action, which renames the shortcut with the status (be sure to disable Open When Run).
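One thing to be aware of is that killall caffeinate stops every caffeinate process, including any started from Terminal or by another tool. A possible variation, sketched below, records the PID of the process the shortcut started and stops only that one. This is not the script used above: the function name toggle_keep_awake and the PID file path are hypothetical, and the sketch assumes the PID has not been reused by an unrelated process in the meantime.

```shell
# Hypothetical PID file location; any path writable by the shortcut would do.
PIDFILE="${PIDFILE:-${TMPDIR:-/tmp}/shortcut-keep-awake.pid}"

# Toggle the command passed as arguments and print the new state.
# Only the process recorded in the PID file is ever stopped.
toggle_keep_awake() {
  if [ -f "$PIDFILE" ] && kill -0 "$(cat "$PIDFILE")" 2>/dev/null; then
    # The recorded process is still alive: stop it and forget the PID.
    kill "$(cat "$PIDFILE")"
    rm -f "$PIDFILE"
    echo "off"
  else
    # Nothing recorded (or the process has gone): start it in the background.
    nohup "$@" >/dev/null 2>&1 &
    echo "$!" > "$PIDFILE"
    echo "on"
  fi
}

# In the shortcut, the last line of the script would be something like:
#   toggle_keep_awake /usr/bin/caffeinate -di
```

The output is still just "on" or "off", so the Rename Shortcut action that follows would not need to change.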

There are lots of ways this technique could be used, and it should also work on iOS and iPadOS. The only caveat might be the need to run the shortcut on login or via Automation (also new in Shortcuts in macOS 26) to update the state in the shortcut name. I’ve already got a toggle for a Hue sensor using the HTTP interface and will inevitably think of a few more now that I’ve got a new tool in my power user toolkit.
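For the login or Automation refresh, running the toggle itself would flip the state rather than just report it. A minimal sketch of a status-only check follows. It assumes a separate refresh shortcut whose Rename Shortcut action is pointed at the control's shortcut, which is an untested assumption, and the function name keep_awake_state is hypothetical.

```shell
# Print "on" if a process with exactly this name is running, otherwise "off".
# Unlike the toggle, this never starts or stops anything.
keep_awake_state() {
  if pgrep -x "$1" >/dev/null 2>&1; then
    echo "on"
  else
    echo "off"
  fi
}

# Report the current caffeinate state for the next action to use.
keep_awake_state caffeinate
```

Taking the process name as an argument means the same check could back other process-based toggles.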